Technology - Google News |

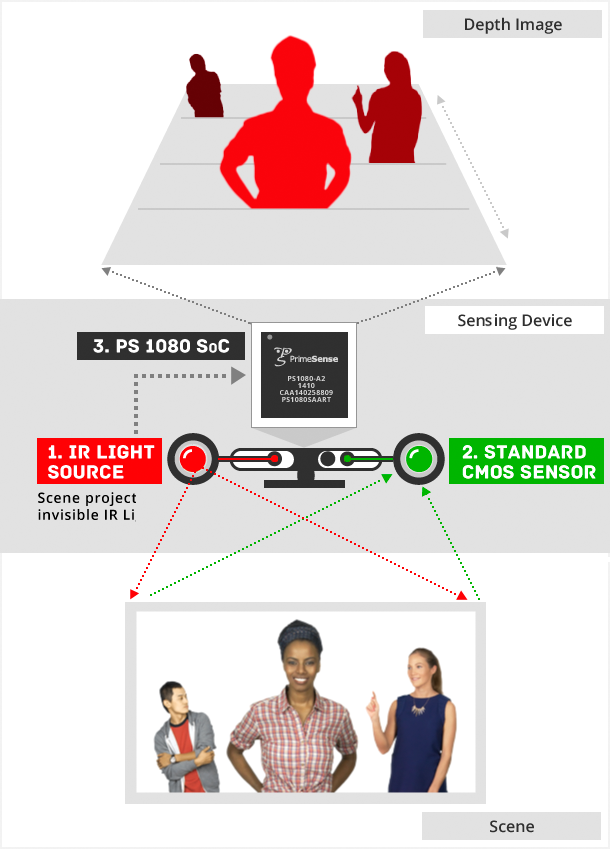

| Why iPad Pro's LiDAR is a big step for Apple in computer vision and AR - AppleInsider Posted: 26 Mar 2020 08:42 AM PDT The new LiDAR camera sensor that debuted in Apple's latest iPad Pro this week is an enhancement of the depth-sensing TrueDepth front-facing imaging array that first made its appearance on iPhone X in late 2017. This year, as it arrives on iPhone 12, LiDAR appears set to achieve a massive installed base of tens of millions of users. Here's why that's important.
[Image: Using iPad Pro LiDAR to bring Apple Arcade's "Hot Lava" to an AR experience]
Pay Back to the Future
Apple is often chided for not being the first company to roll out new technology. Three years ago, I addressed this in the editorial "When Apple is 2 years behind you, put your things in order," which noted that "there is a solid decade of evidence that, for Apple, being behind is a competitive advantage." Over the past year, for example, various Android enthusiasts have tried to make a big deal about the folding OLED displays debuted by Samsung, Motorola, and Huawei, despite their staggering cost and very limited real benefit, which is greatly outweighed by significant downsides including vastly increased fragility and the loss of water resistance. It's no mystery why folding phones are still priced in the stratosphere. All of these companies were already just barely selling their flagship premium phones priced above $500, let alone these folding concepts priced north of $2,000. The price of folding displays will have to drop radically before any significant number of consumers adopts them, and prices don't come down just because new generations are being offered. The displays have to actually be bought up in quantity to bring prices down, because the resulting profits fund the more efficient, better iterations of new products that can reach lower prices. That's simply how component pricing works. 
There is also no large, inherent demand for folding devices; we all moved from flip phones to the iPhone form factor because of the huge jump in functionality possible with a large touch screen. We aren't moving back out of some bizarre nostalgia for a "click" sound paired with 2003-era functionality, no matter how many writers line up to carry water for Motorola and Samsung's marketing groups telling us up is down, and that basic flip phones are so much fun to click open and shut that they're worth $1,500 more than just carrying around a pair of coconut shells with your iPhone. There's a big difference between valuable new technology and a frilly gimmick. Even technologies that promise significant advantages, such as emerging 5G mobile networks, have benefits that are tied to dependencies ranging from the actual availability of 5G networks to the extra cost required to access that faster network capacity. The only thing that will bring 5G modem pricing down is the emergence of high-volume demand for 5G phones. As we saw from the rollout of 4G, that can only occur if the market invests in advance to deliver volume sales. Get too far ahead of that demand, and you end up having bet on a large inventory of early-generation tech that loses too much money for you to continue building the future. That was a major problem for Motorola and HTC. Back in 2012, Apple could belatedly adopt 4G after allowing its Android competitors to race in and dabble with first-generation 4G components. Only after 4G networks were becoming broadly available did Apple place its own high-volume orders, which brought down its component pricing dramatically. That allowed Apple's first 4G LTE phone, iPhone 5, to compete favorably with Android makers who already had years of 4G phones under their belts. iPhone 5 was not the first 4G phone by a large margin, but it was the most profitable by a large margin. 
Apple pursued a similar strategy with Apple Watch, which delivered micro-sized silicon for wearables that was solidly ahead of its competition, despite Android Wear, Galaxy Gear and other platforms jumping into the smartwatch business multiple years ahead of Apple. Apple accomplished this through a media blitz that successfully sold large volumes of Apple Watch, funding rapid annual iterations that could get much better, far faster than all of the various watch concepts floated out in dabbling volumes that couldn't pay for their future. That was only possible because Apple did enough work ahead of time to make Apple Watch 1.0 appealing enough to users to justify its price tag. Apple sold many more wearables at $350 and up than its competitors could with their own less capable, less finished devices that were trying to sell for around $150. That's why they had no future while Apple Watch is now delivering advanced feats in Series 5. Apple Watch also laid a foundation for technology used in iPhone X. Pretty clearly, Apple's core competency involves building out a shippable product that can justify its price. That's something its competitors are clearly failing to do. Some are selling larger volumes of much cheaper devices, like $300 Android phones and $15 Xiaomi bands, and more expensive devices in tiny quantities, like the folding flip phones priced at $1,600 and up. Neither category is achieving the kind of success Apple is regularly capturing with new releases that appear to be perfectly priced to drive the volume of sales Apple needs both to profit now and to pay for its next generation of developments. 
Regardless of your personal opinion of Apple, it is inarguably clear that the company is, and has been, doing the best job of financing the future of consumer technology: a combination of product design, software development, effective marketing, and global operations capable of delivering the right product at the right time at an acceptable price for a critical mass of buyers.
Why would I LiDAR?
In addition to leveraging its trailing position to leapfrog competitors in volume pricing, Apple has also used exclusive, expensive new technologies to advance itself ahead of lower-priced commodity competition. Apple has consistently made a compelling case for the public to buy its products since iPod. It wasn't just the first model of iPhone that had people standing in line. Every year Apple's next iPhone laid out a proposition that tens of millions of regular consumers couldn't refuse. Each new iPad and Mac has achieved strong sales, and Apple has recently crafted new reasons to own Apple Watch or AirPods, entirely new product categories that barely existed before Apple turned them into mass-market success stories. Not everything Apple does is a blockbuster success, but even its marginal "hobby" projects such as Apple TV and HomePod bring in billions of dollars, even in markets where its competitors are desperately giving away products such as Roku, Fire, Vizio, and Alexa devices that are tied to a surveillance advertising business model. Yet, if folding displays and 5G (both of which appear to have obvious intrinsic appeal, at least on a conceptual level) can't manage to grab users' attention at the prices they currently demand, how can it be possible that Apple's foray into Augmented Reality will ever sustain itself? That's the emerging media narrative voiced by pundits who are still stridently, desperately contorting logical loopholes trying to depict Apple as potentially failing in some respect. 
TechCrunch recently announced that "consumers just don't see anything they want yet" in the field of AR, but that doesn't become true with mere repetition. One could say the same thing about bitmapped fonts in 1985. Apple is selling LiDAR this year as a compelling story for iPad Pro and, we expect, iPhone 12 later this year. LiDAR works similarly to the front-facing TrueDepth sensor, but rather than being optimized for the face, it allows users to scan a depth-accurate depiction of their surroundings.
[Image: Using iPad Pro LiDAR to measure body mobility]
The concept isn't new. A few months after Apple acquired PrimeSense in 2013, we detailed how the 3D scanning "structure sensor" technology it acquired could be used to scan models of objects. And before that, other companies that partnered with PrimeSense used the technology to power such concepts as Microsoft's Kinect for body motion tracking used in video games and "natural interaction" applications, effectively full-body gestures.
[Image: Apple acquired PrimeSense at the end of 2013]
In the years since, Google dabbled with partners under Project Tango, which added a rear depth camera system to various Android phablets that failed to gain any traction in the market. As with many of the things Google does, Tango was released the way Apple did things in the 1990s: partner with various big manufacturers, whip up hype about nebulous features, then release it as a tacked-on technology on otherwise unremarkable devices that can't compete with standard devices at market prices. Then after failing, simply abandon everything and allow others to benefit from your mistakes! Despite years of dabbling with a rear 3D sensor on mobile devices, Google shelved Tango just after Apple released its 1.0 vision for ARKit. Google then copied ARKit, the same way it dumped its own earlier Google Wallet efforts to copy Apple Pay with Android Pay. 
Or the way Google dropped its initial Android efforts to deliver a new version of Android modeled closely after iOS. Rather than trying to promise everything in its first release of ARKit, Apple focused the 3D camera on the user, and released several useful, real-world features for that technology, including Animoji and Selfie Portrait mode, and later Portrait Lighting AR effects. Apple's TrueDepth implementation was not only functional and delivered some clear benefits, but was also part of a series of other alluring features delivered in iPhone X. Apple's ability to take a single aspect of PrimeSense technology and deliver it as part of an attractive overall package resulted in a huge leap in sales and profits that helped pay for new generations of iPhone X: the less expensive, mass-market iPhone XR, the luxurious iPhone XS, and this year's pairing of iPhone 11 and its Pro counterparts. Further, the technical work Apple did to advance its ARKit frameworks (which handle things like identifying surfaces, occlusion of people, and realistic rendering effects that make augmented reality graphics blend seamlessly into camera video) continues to adapt to take advantage of new hardware like the new LiDAR.
Through the AR looking glass
LiDAR on the iPad Pro adds specialized hardware to perform rapid, accurate depth imaging that makes ARKit work that much better, expanding its capabilities. It's a bit like putting glasses on a genius child, who can now use their innate visual cortex functions even more efficiently and with greater clarity, unlocking new abilities to analyze the world and enjoy life. In the same fashion, LiDAR hardware makes ARKit software and the apps that use it work even better and do new things that are either less practical or not at all possible with standard camera sensors.
[Image: iPad Pro LiDAR meshing of a local environment]
LiDAR will be an even greater game-changer on iPhone, as it will dramatically expand the installed base of LiDAR-capable users. 
In one year, Apple will have many tens of millions of users on LiDAR-equipped hardware, giving developers a base large enough to justify taking advantage of its features. One of the largest problems for Google's Tango was that developers had no incentive to code specifically for it, because the hardware to use it was only installed on a few niche models. There was never any realistic hope that large numbers of Android users would end up with Tango phones because the main attraction to Android is cheapness. By rapidly adding LiDAR hardware across its product line, Apple will further differentiate iOS and establish its platforms as the way to develop cutting-edge AR applications empowered by LiDAR's "time of flight" sensing data. The obvious next big step for AR is shifting advanced depth imaging sensors to display computer-generated graphics on a lens you look through, rather than just mixing them with video to create a composite, "augmented" depiction of reality. With development attached to Apple's software and the unique hardware of its installed base, it will be very difficult for rivals to ship out anything comparable. It took Android makers years just to add fingerprint sensors, and during that rollout they got all sorts of things wrong. Notably, many early fingerprint sensors on Android stored that sensitive data irresponsibly in a way that malware could potentially access. The shape of your walls and the layout of objects inside your house or company office are similarly sensitive data that needs to be kept private. Yet Apple is alone in marketing the idea that it doesn't need to collect your data and try to make some money off of analyzing it and selling details about you. Tim Cook: "You're not our product." As with "smart" televisions that watch you, AR and LiDAR sensor data should be tied to premium hardware capable of offering you security and privacy, not just collected without any thought to where it might end up. 
All of these things add up to a strategy closely aligned with Apple's strategic vision for computational photography, Visual Inertial Odometry, and SLAM (Simultaneous Localization And Mapping), the technology that builds out a map of the world that your depth camera system "perceives" around you as you use it. Right now, LiDAR means instantaneous AR and object modeling. Over the next few years, expect ARKit to jump off the device display to be projected right into your vision, giving you immediate augmented data about the world around you. And before that happens, expect Apple and its third-party partners to offer an increasing array of compelling AR apps for mobile devices. These can deliver more immersive gaming; more personal shopping, where furnishings can be virtually placed in your home, garments can be virtually draped over your body, and enhancements can be displayed over your home or landscaping; and customized mapping that provides crosstown routing, campus navigation, indoor directions to an ATM, and the location of lost objects using NFC and Ultra Wideband devices. Companies are already using AR in employee training and other valuable applications, and educators are seeing real value in grabbing the attention of students with AR. What's making all of this progress available is the free market for consumer devices that rewards innovation with profits. Last year's precision engineering work by Samsung to deliver its technically advanced but wildly impractical Galaxy Fold was not similarly rewarded. Google's advanced camera features for Pixel 4 and some smart ideas that went into Andy Rubin's Essential were also not rewarded. Microsoft's interesting conceptual ideas for Surface devices were not really rewarded. What was rewarded were AirPods Pro, Apple Watch, iPad, MacBooks, and to the greatest extreme iPhone 11, the most profitable device of the last season. 
A major aspect of iPhone 11's broad allure was its computational photography, which LiDAR has the power to further enhance in obvious ways. Apple's critics keep vilifying the company's profitability as if they're trying to make the case that being paid for hard work is immoral. That's absurd and it's wrong. Apple is currently performing well commercially only because it is doing a better job at delivering better technology in a better package at the right time at an acceptable cost to consumers. Apple doesn't have an enforceable monopoly on phones or wearables or tablets or earbuds. If its competitors were doing an equally good job, they'd be similarly profitable. But they are not and Apple is. LiDAR is the latest evidence of Apple's competency in delivering, not just announcing, advanced technology at the right price. |

| Xenoblade Chronicles Definitive Edition - Nintendo Direct Mini 3.26.20 - Nintendo Switch - Nintendo Posted: 26 Mar 2020 07:15 AM PDT [unable to retrieve full-text content]

|
